sklearn.model_selection.TunedThresholdClassifierCV

- class sklearn.model_selection.TunedThresholdClassifierCV(estimator, *, scoring='balanced_accuracy', response_method='auto', thresholds=100, cv=None, refit=True, n_jobs=None, random_state=None, store_cv_results=False)

Classifier that post-tunes the decision threshold using cross-validation.

This estimator post-tunes the decision threshold (cut-off point) that is used for converting posterior probability estimates (i.e. output of predict_proba) or decision scores (i.e. output of decision_function) into a class label. The tuning is done by optimizing a binary metric, potentially constrained by another metric.

Read more in the User Guide.

Added in version 1.5.

- Parameters:

- estimator : estimator instance

The classifier, fitted or not, for which we want to optimize the decision threshold used during predict.

- scoring : str or callable, default="balanced_accuracy"

The objective metric to be optimized. Can be one of:

a string associated to a scoring function for binary classification (see model evaluation documentation);

a scorer callable object created with make_scorer.

- response_method : {"auto", "decision_function", "predict_proba"}, default="auto"

Method of the classifier estimator corresponding to the decision function for which we want to find a threshold. It can be:

if "auto", it will try to invoke "predict_proba" or "decision_function" on the classifier, in that order;

otherwise, one of "predict_proba" or "decision_function". If the method is not implemented by the classifier, it will raise an error.

- thresholds : int or array-like, default=100

The number of decision thresholds to use when discretizing the output of the classifier method. Pass an array-like to manually specify the thresholds to use.

- cv : int, float, cross-validation generator, iterable or "prefit", default=None

Determines the cross-validation splitting strategy used to train the classifier. Possible inputs for cv are:

None, to use the default 5-fold stratified K-fold cross validation;

An integer number, to specify the number of folds in a stratified k-fold;

A float number, to specify a single shuffle split. The floating number should be in (0, 1) and represent the size of the validation set;

An object to be used as a cross-validation generator;

An iterable yielding train, test splits;

"prefit", to bypass the cross-validation.

Refer to the User Guide for the various cross-validation strategies that can be used here.

Warning

Using cv="prefit" and passing the same dataset for fitting estimator and tuning the cut-off point is subject to undesired overfitting. You can refer to Consideration regarding model refitting and cross-validation for an example.

This option should only be used when the set used to fit estimator is different from the one used to tune the cut-off point (by calling TunedThresholdClassifierCV.fit).

- refit : bool, default=True

Whether or not to refit the classifier on the entire training set once the decision threshold has been found. Note that forcing refit=False on cross-validation having more than a single split will raise an error. Similarly, refit=True in conjunction with cv="prefit" will raise an error.

- n_jobs : int, default=None

The number of jobs to run in parallel. When cv represents a cross-validation strategy, the fitting and scoring on each data split is done in parallel. None means 1 unless in a joblib.parallel_backend context. -1 means using all processors. See Glossary for more details.

- random_state : int, RandomState instance or None, default=None

Controls the randomness of cross-validation when cv is a float. See Glossary.

- store_cv_results : bool, default=False

Whether to store all scores and thresholds computed during the cross-validation process.

- Attributes:

- estimator_ : estimator instance

The fitted classifier used when predicting.

- best_threshold_ : float

The new decision threshold.

- best_score_ : float or None

The optimal score of the objective metric, evaluated at best_threshold_.

- cv_results_ : dict or None

A dictionary containing the scores and thresholds computed during the cross-validation process. Only exists if store_cv_results=True. The keys are "thresholds" and "scores".

- classes_ : ndarray of shape (n_classes,)

Class labels.

- n_features_in_ : int

Number of features seen during fit. Only defined if the underlying estimator exposes such an attribute when fit.

- feature_names_in_ : ndarray of shape (n_features_in_,)

Names of features seen during fit. Only defined if the underlying estimator exposes such an attribute when fit.

See also

sklearn.model_selection.FixedThresholdClassifier : Classifier that uses a constant threshold.

sklearn.calibration.CalibratedClassifierCV : Estimator that calibrates probabilities.

Examples

>>> from sklearn.datasets import make_classification
>>> from sklearn.ensemble import RandomForestClassifier
>>> from sklearn.metrics import classification_report
>>> from sklearn.model_selection import TunedThresholdClassifierCV, train_test_split
>>> X, y = make_classification(
...     n_samples=1_000, weights=[0.9, 0.1], class_sep=0.8, random_state=42
... )
>>> X_train, X_test, y_train, y_test = train_test_split(
...     X, y, stratify=y, random_state=42
... )
>>> classifier = RandomForestClassifier(random_state=0).fit(X_train, y_train)
>>> print(classification_report(y_test, classifier.predict(X_test)))
              precision    recall  f1-score   support

           0       0.94      0.99      0.96       224
           1       0.80      0.46      0.59        26

    accuracy                           0.93       250
   macro avg       0.87      0.72      0.77       250
weighted avg       0.93      0.93      0.92       250

>>> classifier_tuned = TunedThresholdClassifierCV(
...     classifier, scoring="balanced_accuracy"
... ).fit(X_train, y_train)
>>> print(
...     f"Cut-off point found at {classifier_tuned.best_threshold_:.3f}"
... )
Cut-off point found at 0.342
>>> print(classification_report(y_test, classifier_tuned.predict(X_test)))
              precision    recall  f1-score   support

           0       0.96      0.95      0.96       224
           1       0.61      0.65      0.63        26

    accuracy                           0.92       250
   macro avg       0.78      0.80      0.79       250
weighted avg       0.92      0.92      0.92       250

Methods

decision_function(X) : Decision function for samples in X using the fitted estimator.

fit(X, y, **params) : Fit the classifier.

get_metadata_routing() : Get metadata routing of this object.

get_params([deep]) : Get parameters for this estimator.

predict(X) : Predict the target of new samples.

predict_log_proba(X) : Predict logarithm class probabilities for X using the fitted estimator.

predict_proba(X) : Predict class probabilities for X using the fitted estimator.

score(X, y[, sample_weight]) : Return the mean accuracy on the given test data and labels.

set_params(**params) : Set the parameters of this estimator.

set_score_request(*[, sample_weight]) : Request metadata passed to the score method.

- property classes_

Class labels.

- decision_function(X)

Decision function for samples in X using the fitted estimator.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Training vectors, where n_samples is the number of samples and n_features is the number of features.

- Returns:

- decisions : ndarray of shape (n_samples,)

The decision function computed by the fitted estimator.

- fit(X, y, **params)

Fit the classifier.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Training data.

- y : array-like of shape (n_samples,)

Target values.

- **params : dict

Parameters to pass to the fit method of the underlying classifier.

- Returns:

- self : object

Returns an instance of self.

- get_metadata_routing()

Get metadata routing of this object.

Please check the User Guide on how the routing mechanism works.

- Returns:

- routing : MetadataRouter

A MetadataRouter encapsulating routing information.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters:

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- params : dict

Parameter names mapped to their values.

- predict(X)

Predict the target of new samples.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

The samples, as accepted by estimator.predict.

- Returns:

- class_labels : ndarray of shape (n_samples,)

The predicted class.

- predict_log_proba(X)

Predict logarithm class probabilities for X using the fitted estimator.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Training vectors, where n_samples is the number of samples and n_features is the number of features.

- Returns:

- log_probabilities : ndarray of shape (n_samples, n_classes)

The logarithm class probabilities of the input samples.

- predict_proba(X)

Predict class probabilities for X using the fitted estimator.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Training vectors, where n_samples is the number of samples and n_features is the number of features.

- Returns:

- probabilities : ndarray of shape (n_samples, n_classes)

The class probabilities of the input samples.

- score(X, y, sample_weight=None)

Return the mean accuracy on the given test data and labels.

In multi-label classification, this is the subset accuracy which is a harsh metric since you require for each sample that each label set be correctly predicted.

- Parameters:

- X : array-like of shape (n_samples, n_features)

Test samples.

- y : array-like of shape (n_samples,) or (n_samples, n_outputs)

True labels for X.

- sample_weight : array-like of shape (n_samples,), default=None

Sample weights.

- Returns:

- score : float

Mean accuracy of self.predict(X) w.r.t. y.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it's possible to update each component of a nested object.

- Parameters:

- **params : dict

Estimator parameters.

- Returns:

- self : estimator instance

Estimator instance.

- set_score_request(*, sample_weight: bool | None | str = '$UNCHANGED$') -> TunedThresholdClassifierCV

Request metadata passed to the score method.

Note that this method is only relevant if enable_metadata_routing=True (see sklearn.set_config). Please see the User Guide on how the routing mechanism works.

The options for each parameter are:

True: metadata is requested, and passed to score if provided. The request is ignored if metadata is not provided.

False: metadata is not requested and the meta-estimator will not pass it to score.

None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.

str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

Added in version 1.3.

Note

This method is only relevant if this estimator is used as a sub-estimator of a meta-estimator, e.g. used inside a Pipeline. Otherwise it has no effect.

- Parameters:

- sample_weight : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for sample_weight parameter in score.

- Returns:

- self : object

The updated object.

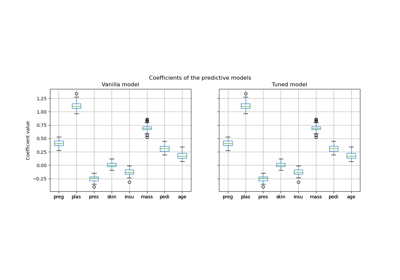

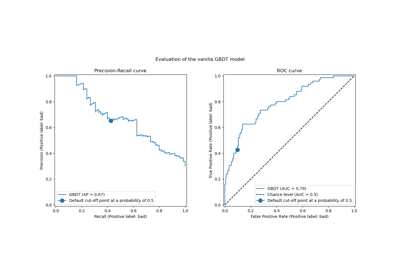

Examples using sklearn.model_selection.TunedThresholdClassifierCV

Post-hoc tuning the cut-off point of decision function

Post-tuning the decision threshold for cost-sensitive learning